Ai Trust, 2021

Ai TRUST Lab

An interdisciplinary research project of the Hochschule für Gestaltung, Offenbach am Main, and the Center for Cognition and Computation, Goethe-Universität, Frankfurt am Main

As part of the research project "AI Lab TRUST", core principles were developed for human-AI interaction in which AI systems understand context and make their autonomous decisions transparent to users. Particular focus was placed on "trust" in human-AI interaction, which plays a central role in the acceptance of autonomous vehicles (AVs) in public transportation systems.

Table of Contents

- First Approach of an Interdisciplinary Methodology

- Autonomous Interface Prototyping

- AV Behaviour Model

- Unified Trust Model

- Team

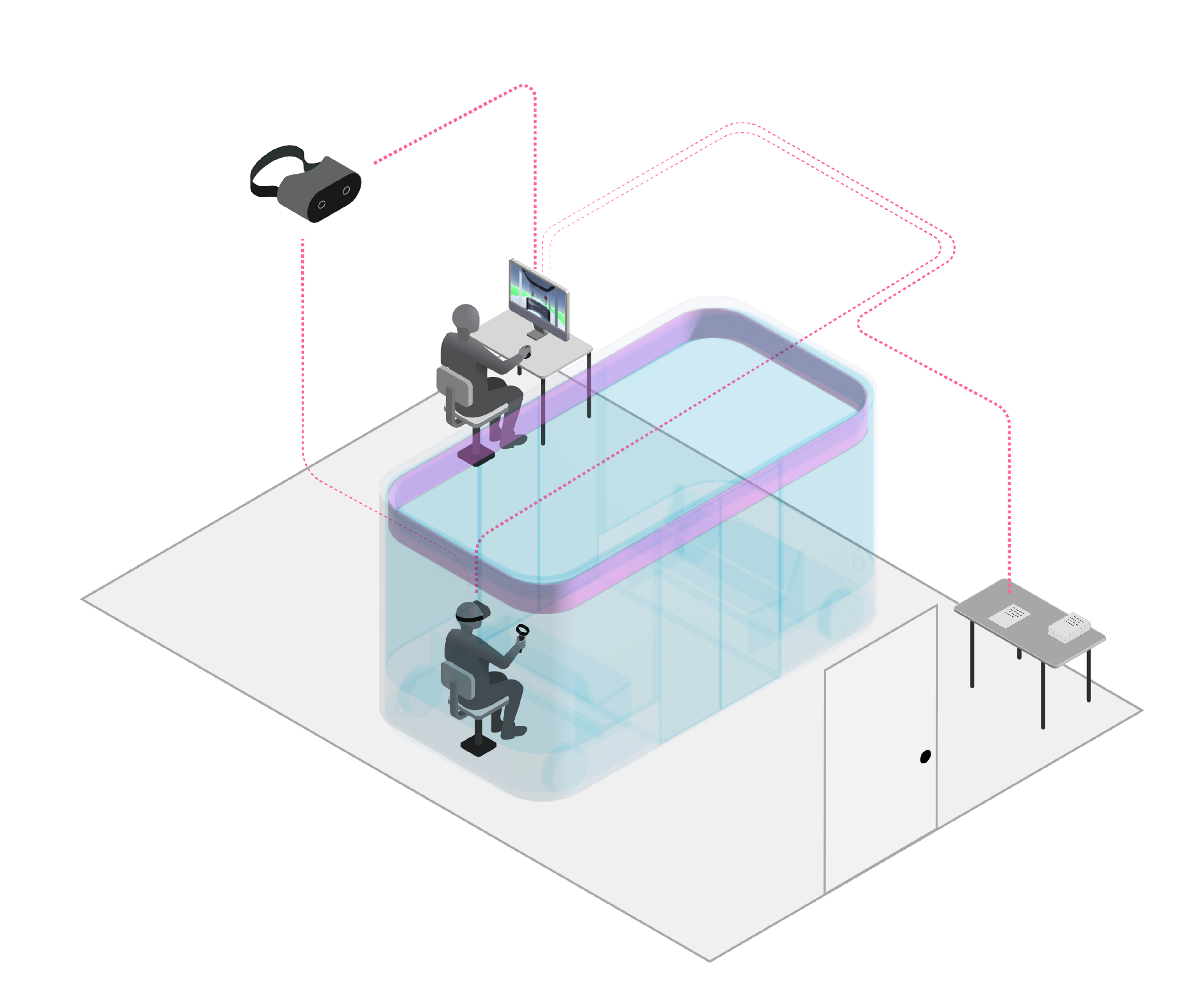

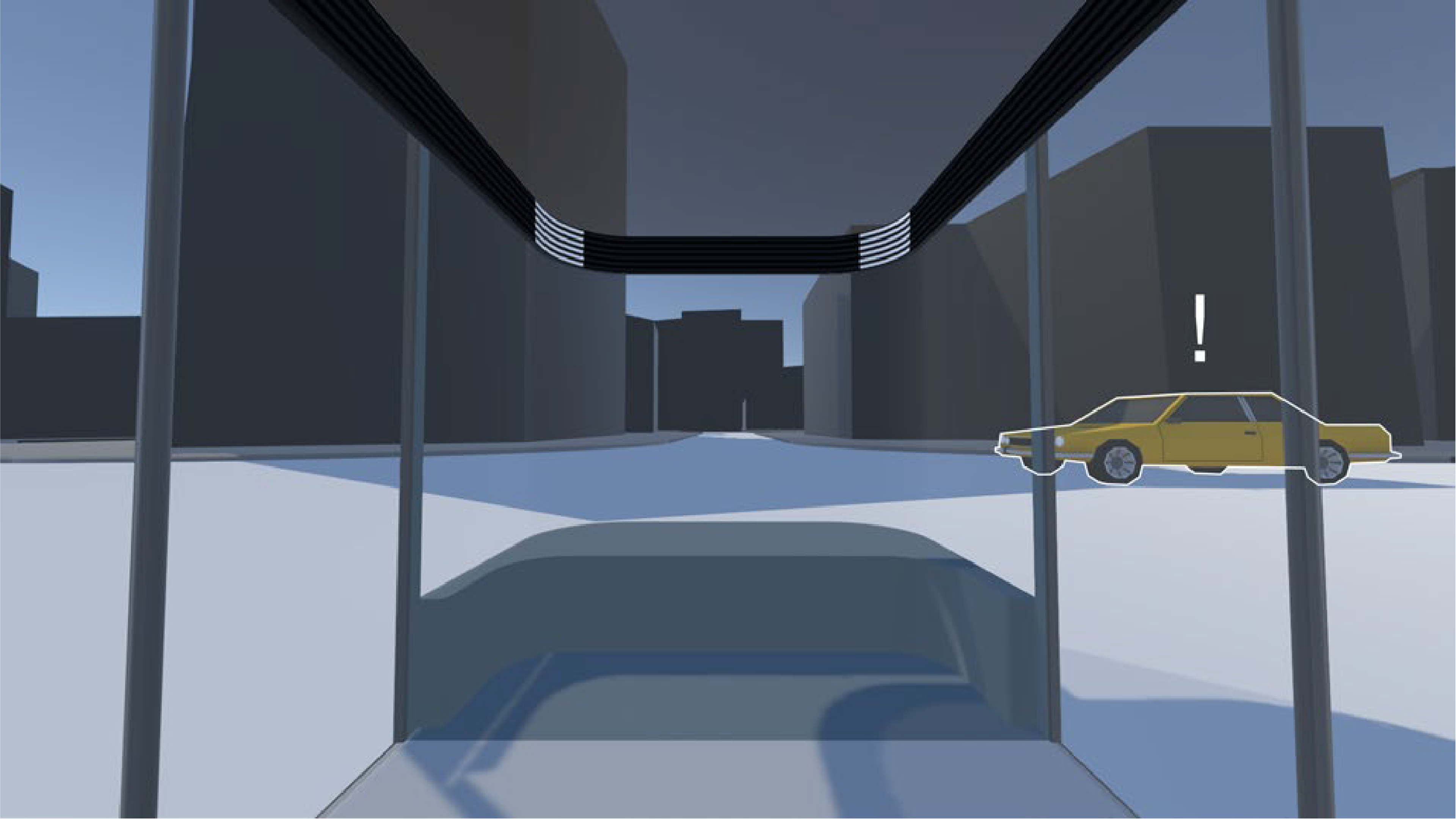

Formal experimental design: immersive VR environment & questionnaires

First Approach of an Interdisciplinary Methodology

Approaches from design research and computer science were aligned to develop an interdisciplinary methodology. The methodology serves as a guide for further research that uses computer-graphics simulations and validates interactive design elements in test situations.

- Phase 1 | Determining a specific traffic situation → Define Context

Define a specific traffic situation. The AV Behaviour Model can help categorize traffic situations that could serve as the basis for a test scenario.

- Phase 2 | Determining situation-specific user needs → VR Pre-Test

Create a VR simulation of the specific traffic situation. Using interactive qualitative questionnaires, test participants can immediately report their situation-specific needs. At this point, the simulated shuttle is in a default mode and does not yet contain any design elements; there is no communication between the human and the shuttle. In this way, situation-specific needs can be approximated. The trust model can help formulate questions that target situation-specific trust.

- Phase 3 | Design proposal → Designing prototypes

Based on the situation-specific user needs, a design proposal can now be drafted. The trust model and DIN EN ISO 9241 help guide design decisions. The default shuttle serves as the basis for designing prototypes.

- Phase 4 | Test design proposal → VR prototype test

The design proposal can now be integrated into the virtual environment from Phase 2. A second test then evaluates the design proposal and its impact on trust. Again, the trust model can help formulate questions that target trust.

- Phase 5 | Developing an end-to-end simulation → Automated evaluation testing

Building on Phase 4, the design proposal could be combined with AI methods to create an end-to-end simulation. The space of possible feedback interactions grows with the complexity of the scenario. Where communicative actions must be chosen depending on the context (e.g. individual user needs), AI methods can help select the most appropriate action. We believe that interactive design elements can be controlled by autonomous processes.
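Phase 5 leaves the choice of AI method open. As a purely illustrative stand-in, the sketch below picks a communicative action by scoring each candidate against the current context and taking the highest-scoring one. The `Context` fields, the action names and the utility values are hypothetical assumptions, not part of the project; in an actual end-to-end simulation, a learned or optimized model would replace the hand-written `score` function.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    """Hypothetical snapshot of the traffic situation and one passenger's needs."""
    braking_imminent: bool
    looking_at_display: bool
    prefers_audio: bool


# Hypothetical candidate feedback actions of the shuttle interior.
ACTIONS = ("no_feedback", "visual_warning", "audio_warning", "haptic_warning")


def score(action: str, ctx: Context) -> float:
    """Hand-written utility; a stand-in for whatever AI method Phase 5 would use."""
    if not ctx.braking_imminent:
        # Nothing to announce: any warning would be unnecessary noise.
        return 1.0 if action == "no_feedback" else 0.0
    if action == "no_feedback":
        return 0.0
    if action == "visual_warning":
        # Only effective if the passenger is actually watching the display.
        return 0.9 if ctx.looking_at_display else 0.3
    if action == "audio_warning":
        return 0.8 if ctx.prefers_audio else 0.6
    return 0.5  # haptic_warning: noticed regardless of gaze or preference


def select_action(ctx: Context) -> str:
    """Return the candidate action with the highest utility in this context."""
    return max(ACTIONS, key=lambda action: score(action, ctx))
```

For example, `select_action(Context(braking_imminent=True, looking_at_display=False, prefers_audio=True))` returns `"audio_warning"`, because a visual cue is of little use to a passenger who is not looking at the display.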

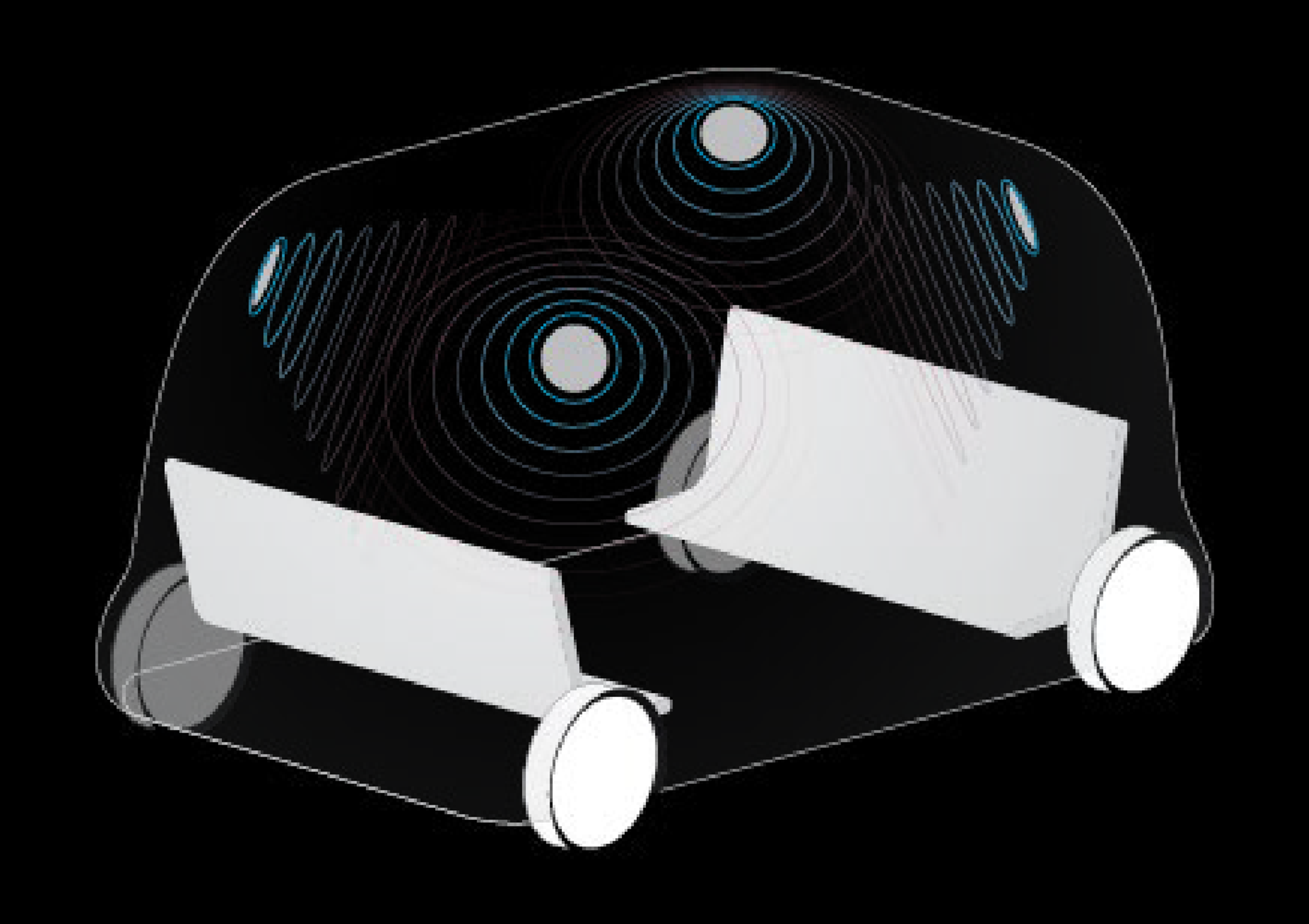

Autonomous Interface Prototyping

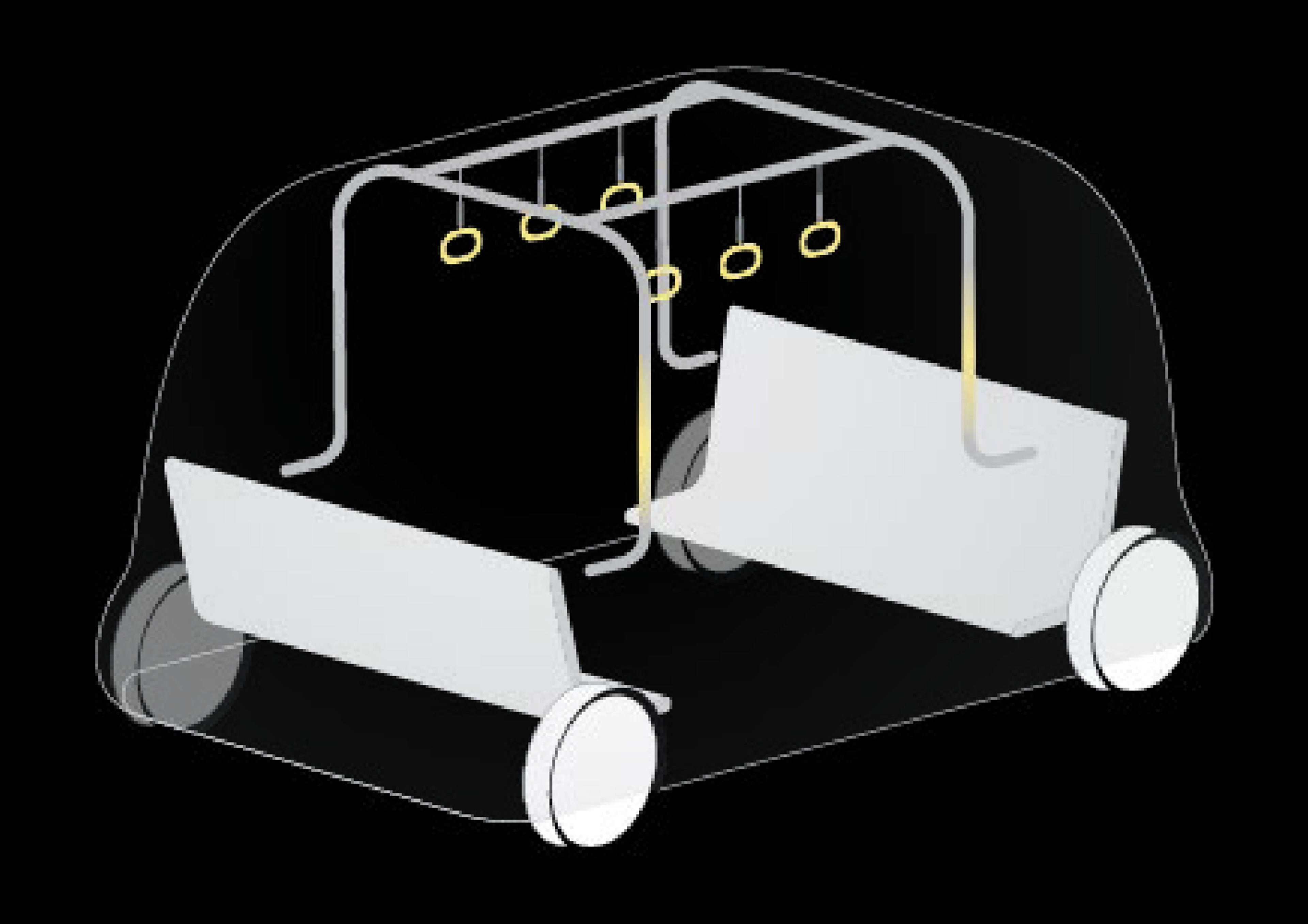

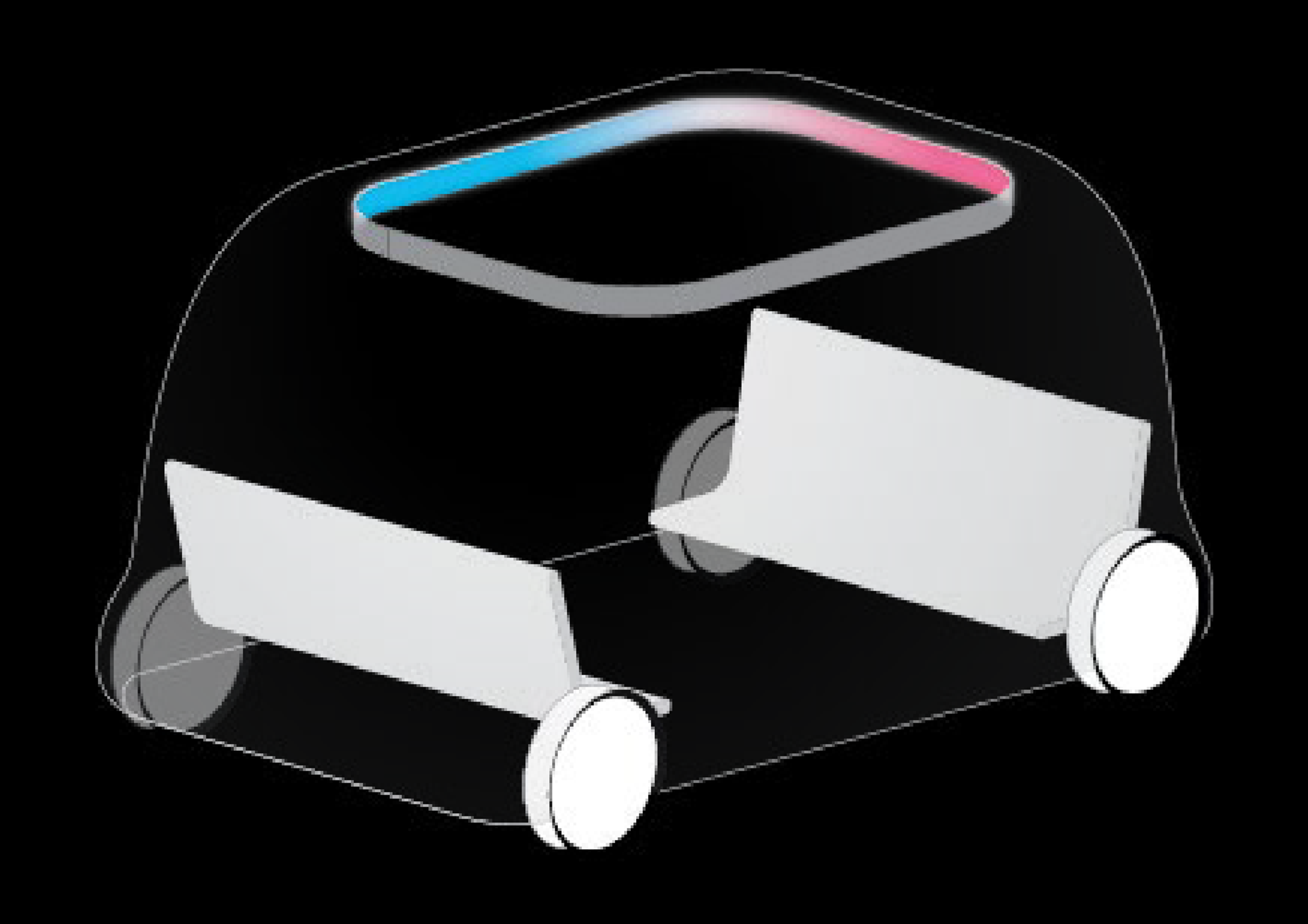

There are numerous ways to communicate with passengers. The question quickly arises as to which sensory channels should be addressed. In order to determine the advantages and disadvantages of haptic, auditory or visual communication, a detailed analysis of the situation-specific requirements must be carried out and user needs must be identified. Figures 2 to 4 illustrate the possibilities available in an autonomous interior.

Auditive communication interface

Haptic communication interface

Visual communication interface

First Design Proposal

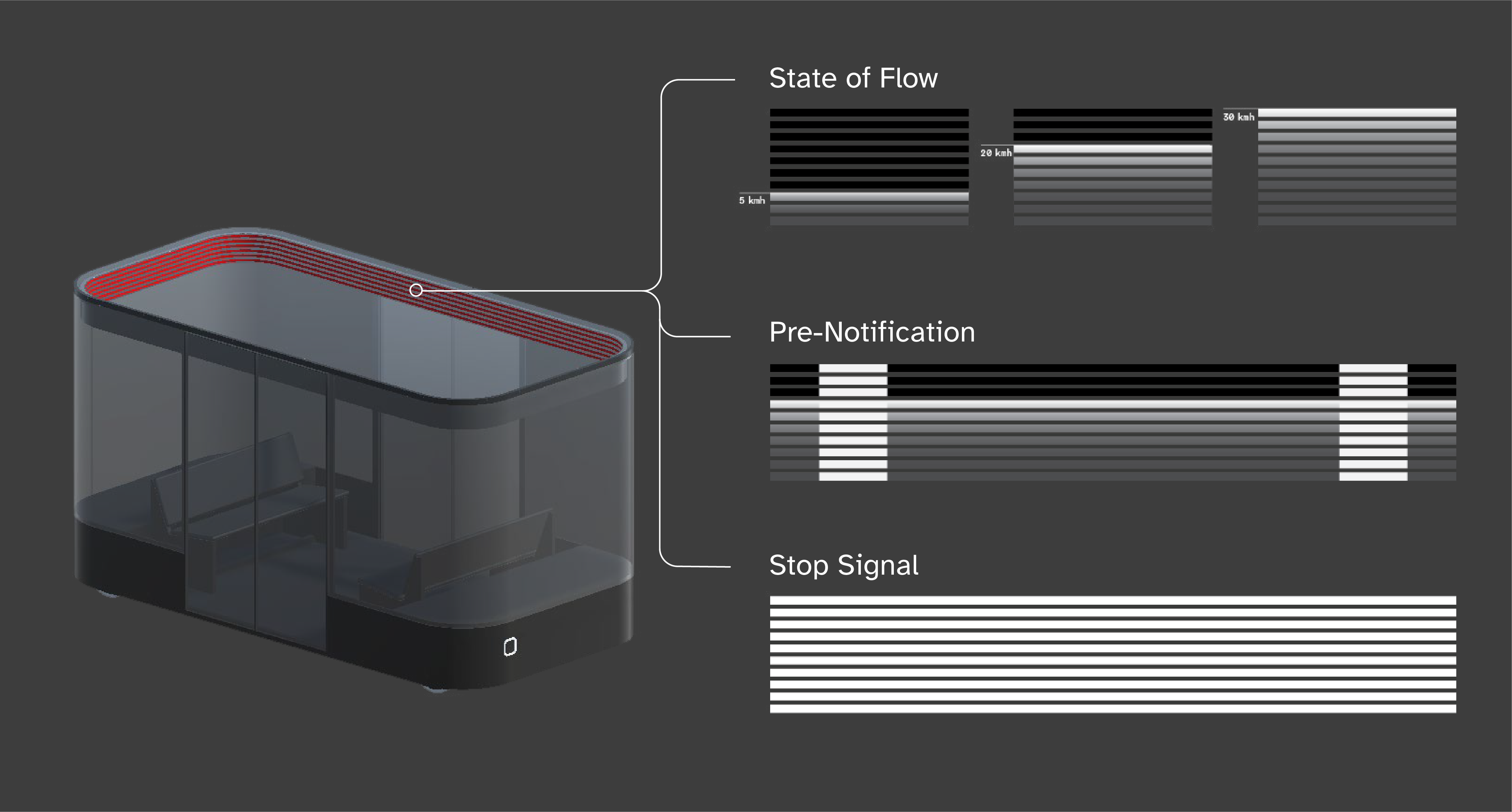

As a first approach to the dynamics of an autonomous interior, we chose a ring display (fig. 5) that is visible from all directions. The display shows the driving speed and gives advance warning of braking events.

Under certain conditions, explainable AI can help passengers understand the system's autonomous decisions and increase their confidence. The reactions of the test participants indicate that early notification of changes in driving behavior could help passengers react in time and better anticipate a dangerous situation. Our challenge was to understand the characteristics of an autonomous interior and to find out whether advance notification of sudden braking could help absorb the shock.
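The figure caption for the ring display distinguishes a flow state and a disruption state. The sketch below shows one possible way to decide between the two modes from the planner's view of upcoming braking. The two-second lead time, the function names and the text rendering are hypothetical assumptions made for illustration; the project does not specify how or when the warning is triggered.

```python
from enum import Enum
from typing import Optional


class DisplayState(Enum):
    FLOW = "flow"              # steady driving: ring shows current speed
    DISRUPTION = "disruption"  # braking ahead or in progress: warning shown


# Hypothetical lead time for the advance warning, in seconds.
WARNING_LEAD_TIME_S = 2.0


def display_state(is_braking: bool, seconds_until_braking: Optional[float]) -> DisplayState:
    """Choose the ring display's mode.

    seconds_until_braking is None when no braking event is scheduled.
    """
    if is_braking:
        return DisplayState.DISRUPTION
    if seconds_until_braking is not None and seconds_until_braking <= WARNING_LEAD_TIME_S:
        # Announce the braking event before it happens.
        return DisplayState.DISRUPTION
    return DisplayState.FLOW


def render(state: DisplayState, speed_kmh: float) -> str:
    """Text stand-in for the content shown on the ring display."""
    if state is DisplayState.DISRUPTION:
        return f"BRAKING ({speed_kmh:.0f} km/h)"
    return f"{speed_kmh:.0f} km/h"
```

Keeping the decision (`display_state`) separate from the presentation (`render`) would let the same trigger logic drive other channels, such as the auditory or haptic interfaces, in later prototypes.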

Looking ahead, future prototypes could, for example, investigate the effects of adaptive lighting, situational darkening of the window panes or announcements of upcoming curves.

Design Proposal: 360° visible display and its function in flow state and disruption state

AV Behaviour Model

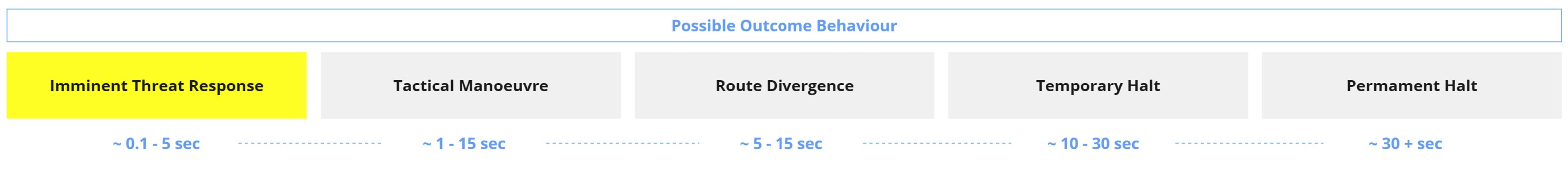

Based on the Framework for Testable Cases and Scenarios of Automated Driving Systems (Thorn et al. 2018), an AV Behaviour Model was created that comprises five categories of possible autonomous behaviour scenarios: (1) immediate threat response, (2) tactical maneuver, (3) route deviation, (4) temporary stop and (5) permanent stop.

Context of autonomous system behaviour

Each of these behavioral scenarios represents a potential starting point where trust toward the autonomous vehicle is tested and may be supported by appropriate design parameters.
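To use the AV Behaviour Model when defining a test scenario in Phase 1, the five categories can be written down as a fixed enumeration and concrete traffic situations tagged with one of them. This is a minimal sketch; the `Scenario` record, the helper `scenarios_for` and the example descriptions are hypothetical and only illustrate the categorization.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable, List


class AVBehaviour(Enum):
    """The five categories of the AV Behaviour Model, numbered as in the text."""
    IMMEDIATE_THREAT_RESPONSE = 1
    TACTICAL_MANEUVER = 2
    ROUTE_DEVIATION = 3
    TEMPORARY_STOP = 4
    PERMANENT_STOP = 5


@dataclass(frozen=True)
class Scenario:
    """Hypothetical record linking a concrete traffic situation to a category."""
    description: str
    category: AVBehaviour


def scenarios_for(category: AVBehaviour, scenarios: Iterable[Scenario]) -> List[Scenario]:
    """Return the scenarios that fall into one behaviour category."""
    return [s for s in scenarios if s.category is category]


# Hypothetical candidate scenarios for a test series.
CANDIDATES = [
    Scenario("Cyclist swerves into the shuttle's lane", AVBehaviour.IMMEDIATE_THREAT_RESPONSE),
    Scenario("Road closed, detour required", AVBehaviour.ROUTE_DEVIATION),
    Scenario("Waiting for passengers at a stop", AVBehaviour.TEMPORARY_STOP),
]
```

Filtering `CANDIDATES` with `scenarios_for(AVBehaviour.IMMEDIATE_THREAT_RESPONSE, CANDIDATES)` yields only the cyclist scenario, which corresponds to the category chosen for the experiment.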

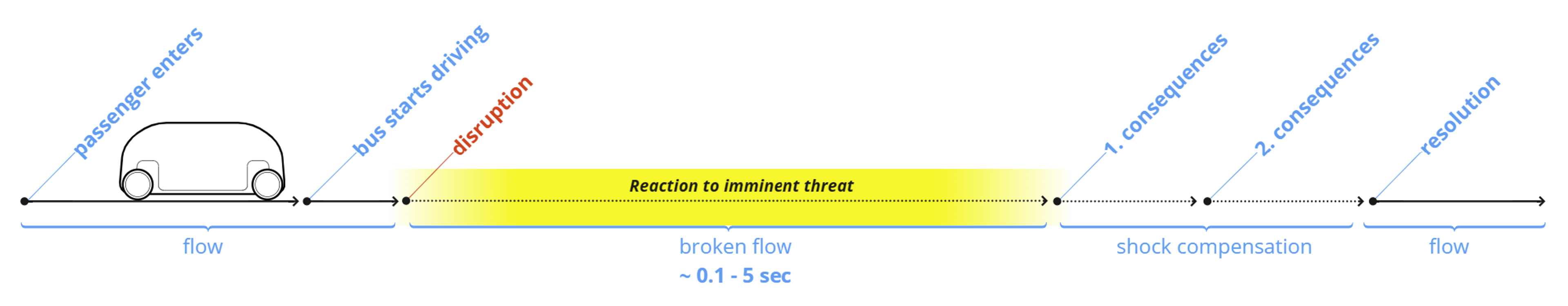

For the experiment, an immediate threat response was simulated. Such a response is an autonomous decision that is implemented immediately, within an action period of approximately 0.1 to 5 seconds. This period spans an abrupt, unpredictable event and the vehicle's immediate reaction to it: an unforeseen break in driving behavior, e.g. a reaction to careless road users.
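When logging simulated events, the stated action period can serve as a simple check of whether an event and the vehicle's reaction fall within the window of an immediate threat response. The sketch below treats the approximate bounds of 0.1 and 5 seconds as hard limits, which is a simplifying assumption; the function name and the timestamp inputs are hypothetical.

```python
# Action period of an immediate threat response as given in the text (seconds).
# The text says "approximately"; using exact bounds is a simplification.
MIN_ACTION_PERIOD_S = 0.1
MAX_ACTION_PERIOD_S = 5.0


def fits_immediate_threat_window(event_time_s: float, reaction_end_s: float) -> bool:
    """Check whether event plus reaction lie within the ~0.1 to 5 s action period.

    event_time_s: timestamp of the abrupt, unpredictable event.
    reaction_end_s: timestamp at which the vehicle's reaction is complete.
    """
    if reaction_end_s < event_time_s:
        raise ValueError("reaction cannot end before the triggering event")
    period = reaction_end_s - event_time_s
    return MIN_ACTION_PERIOD_S <= period <= MAX_ACTION_PERIOD_S
```

A reaction that completes 2.5 seconds after the event (`fits_immediate_threat_window(10.0, 12.5)`) falls inside the window; one that takes 7 seconds would rather be a candidate for another category, such as a tactical maneuver.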

Timeframe of reaction to imminent threat

Unified Trust Model

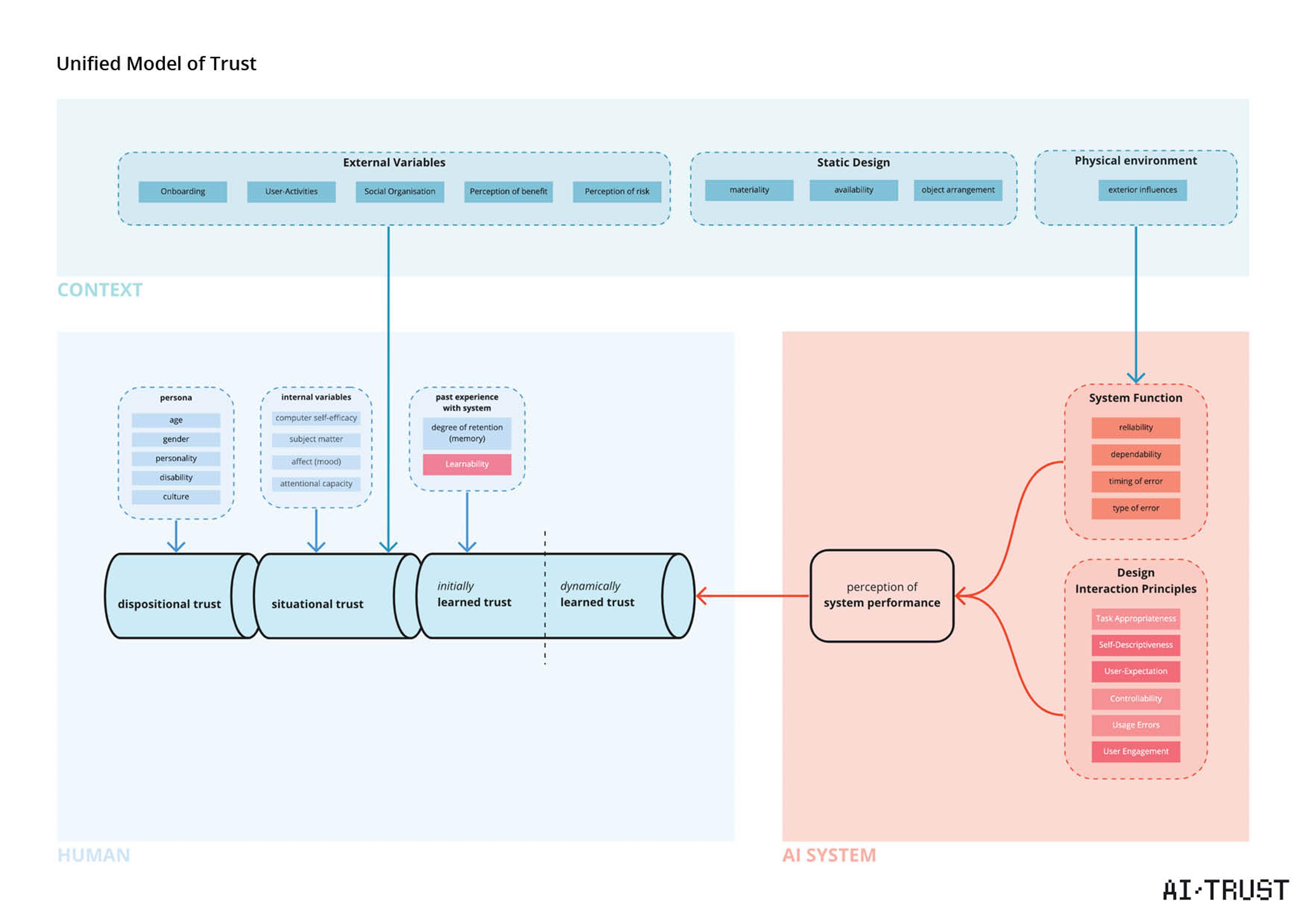

A trust model can be used to locate the causal relationships among all trust factors in a human-AI interaction. It serves as an aid for evaluating how individual interaction principles affect passengers' overall trust. The trust model can also be seen as a map on which we define the design action space in scenarios of a potential breach of trust. It captures the dynamic nature of a user's trust in an automated system. The model reveals the complexity of trust by incorporating three major sources of trust variability at the dispositional, situational and learned levels of analysis.

Unified Model of Trust

Dispositional Trust

The dispositional level concerns a person's general disposition towards an automated task or technology, independent of the context or a particular system. It is influenced by factors such as a person's culture, age, gender and personality traits.

Situational Trust

The situational level includes all factors that describe the influence of the situation. These include external factors such as the environment, the type of system or the task, as well as internal factors of the interactor such as self-confidence, expertise, mood or attentiveness to the vehicle and traffic situation.

Learned Trust

The learned level includes factors such as system expectations, especially the confidence factors that result from interacting with the human-machine interface (HMI). HMI design features such as self-descriptiveness, controllability and usability play an essential role.
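Because the trust model is used to formulate questionnaire items in Phases 2 and 4, it can help to encode its three levels and the factors named above as a lookup table, so that each question can be traced back to the level it targets. This is a minimal sketch; the factor strings are taken from the text, while `level_of`, `group_questions` and the example questions are hypothetical.

```python
from enum import Enum
from typing import Dict, FrozenSet, List, Tuple


class TrustLevel(Enum):
    DISPOSITIONAL = "dispositional"
    SITUATIONAL = "situational"
    LEARNED = "learned"


# Factors named in the text, grouped by their level of analysis.
TRUST_FACTORS: Dict[TrustLevel, FrozenSet[str]] = {
    TrustLevel.DISPOSITIONAL: frozenset({"culture", "age", "gender", "personality traits"}),
    TrustLevel.SITUATIONAL: frozenset({
        # external factors
        "environment", "type of system", "task",
        # internal factors of the interactor
        "self-confidence", "expertise", "mood", "attentiveness",
    }),
    TrustLevel.LEARNED: frozenset({
        "system expectations", "self-descriptiveness", "controllability", "usability",
    }),
}


def level_of(factor: str) -> TrustLevel:
    """Return the trust level a factor belongs to; raise KeyError if unknown."""
    for level, factors in TRUST_FACTORS.items():
        if factor in factors:
            return level
    raise KeyError(factor)


def group_questions(items: List[Tuple[str, str]]) -> Dict[TrustLevel, List[str]]:
    """Group (question, factor) pairs by the trust level of their factor."""
    grouped: Dict[TrustLevel, List[str]] = {level: [] for level in TrustLevel}
    for question, factor in items:
        grouped[level_of(factor)].append(question)
    return grouped
```

Grouping, for instance, a question tagged "attentiveness" and one tagged "self-descriptiveness" places the first under the situational level and the second under the learned level, which makes gaps in coverage (here, no dispositional items) easy to spot.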

Team

Lead

Prof. Peter Eckart

Integrative Design

Hochschule für Gestaltung, Offenbach am Main

Prof. Dr. Kai Vöckler

Urban Design

Hochschule für Gestaltung, Offenbach am Main

Prof. Dr. Visvanathan Ramesh

Vision Systems

Center for Cognition and Computation, Goethe-Universität, Frankfurt am Main

Researcher

Ken Rodenwaldt

Scientific Assistant

Hochschule für Gestaltung, Offenbach am Main

Armin Arndt

Scientific Assistant

Hochschule für Gestaltung, Offenbach am Main

Martin Klingebiel

Scientific Assistant

Center for Cognition and Computation, Goethe-Universität, Frankfurt am Main

Leonard Neunzerling

Student Assistant

Hochschule für Gestaltung, Offenbach am Main